## API Design Basics

Start with these foundational concepts to build a solid API.

## Advanced Considerations

Once you've mastered the basics, these topics will help you build more sophisticated APIs.

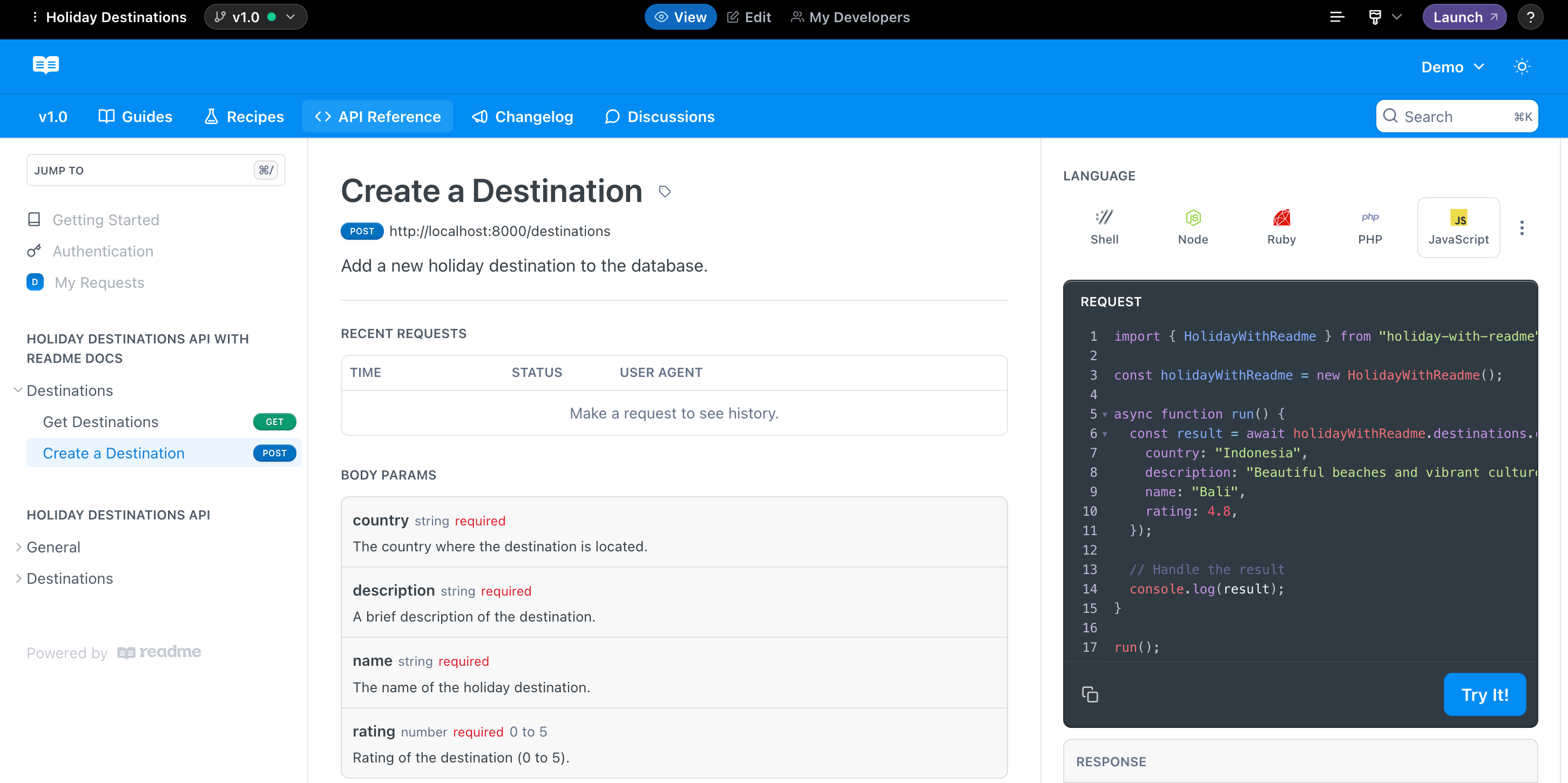

## Productizing APIs

Turn your API into a polished product with these guides on documentation, security, and more.

Render bytes as [Secret Key] API->>Authorization Server: Store [Bob, [Secret Key]] Authorization Server-->>API: Success API-->>API Consumer[Bob]: [Secret Key] end rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Authorized API Request API Consumer[Bob]->>API: [Req] with [Secret Key] API->>Authorization Server: Who is this? [Secret Key] Authorization Server-->>API: Bob Note right of API: Process Request under context Bob API-->>API Consumer[Bob]: [Resp] end ``` * **Time to API Call**: As Fast as it gets. * **Ease of API Consumer Integration**: Very Easy * **Ease of API Producer Implementation**: Very Easy * **Other Considerations**: * Often Difficult to integrate into an API Gateway * Any validation requires a lookup where-ever these are stored. If you solely store them in the DB, this means that all lookups require a DB Read, which can cause additional latency to every request. ### OAuth 2.0 Secrets ```mermaid sequenceDiagram participant API Consumer[Bob] participant API participant Authorization Server rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Configure Key API Consumer[Bob]-->>API: AUTHORIZED SESSION [Bob] with [API] API-->>API Consumer[Bob]: API Consumer[Bob]->>API: Request New oAuth Application Note left of API: Generate some random bytes.

Render bytes as [Client ID], [Client Secret]

(AKA Secret Key). API->>Authorization Server: [Bob, [Secret Key]] Authorization Server-->>API: Success API-->>API Consumer[Bob]: [Secret Key] end rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Authorized API Request API Consumer[Bob]-->>Authorization Server: SECURE SESSION [Unknown] with [Authorization Server] Authorization Server-->>API Consumer[Bob]: API Consumer[Bob]->>Authorization Server: GET /oauth/token with [Secret Key] Note left of Authorization Server: Lookup [Secret Key] as Bob

Generate short-lived JWT with {"sub":"Bob"} claims Authorization Server-->>API Consumer[Bob]: JWT["Bob"] API Consumer[Bob]-->>API: SECURE SESSION [Unknown] with [API] API-->>API Consumer[Bob]: API Consumer[Bob]->>API: [Req] with header "Authorization Bearer \${JWT["Bob"]}" Note left of API: Process Request under context Bob API-->>API Consumer[Bob]: [Resp] end ``` OAuth is commonly associated with user authentication through a social login. This doesn't make sense for API applications, as the system authenticates and authorizes an application rather than a user. However, through the Client Credentials Flow ([OAuth 2.0 RFC 6749, section 4.4](https://tools.ietf.org/html/rfc6749#section-4.4])), a user application can exchange a **Client ID**, and **Client Secret** for a short lived Access Token. In this scenario, the **Client ID** and **Client Secret** pair are the **Shared Secret** which would be passed to the integrating developer to configure in their application. In the OpenID Connect (OIDC) protocol, this happens by making a request to the **/oauth/token** endpoint. ```sh TOKEN=$(curl --header "Content-Type: application/x-www-form-urlencoded" \ --request POST \ --data "grant_type=client_credentials" \ --data "client_id=CLIENT_ID" \ --data "client_secret=CLIENT_SECRET" \ https://auth.example.com/oauth/token | jq -r '.access_token') curl --header "Authorization Bearer $TOKEN" \ https://api.example.com/v1/endpoint ``` * **Time to API Call**: Slow. * **Ease of API Consumer Integration**: Difficult * **Ease of API Producer Implementation**: Difficult * **Other Considerations**: * Can enable additional use-cases, such as granting third party systems the capability to make API calls on behalf of your users. ### Public/Private Key Pairs A Public Private Key Pair allows for a user to hold a secret and the server to validate that the user holds a secret, without the server ever holding the secret. For the purpose of authenticating users, this mechanism has the lowest attack surface : i.e. it is the most secure. ```mermaid sequenceDiagram participant API Consumer[Bob] participant API participant Authorization Server rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Configure Key API Consumer[Bob]-->>API: AUTHORIZED SESSION [Bob] with [API] API-->>API Consumer[Bob]: Note right of API Consumer[Bob]: Generate Public/Private Key Pair API Consumer[Bob]->>API: Send Public Key API->>Authorization Server: Store [Bob, [Public Key]] Authorization Server-->>API: Success API-->>API Consumer[Bob]: Success end rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Authorized API Request API Consumer[Bob]-->>API: SECURE SESSION [Unknown] with [API] API-->>API Consumer[Bob]: API Consumer[Bob]->>Authorization Server: Hello, I am Bob Note left of Authorization Server: Generate Random Number [NONCE] Authorization Server-->>API Consumer[Bob]: Hello Bob, please sign [NONCE] Note right of API Consumer[Bob]: Sign [NONCE] with Private Key as [PROOF] API Consumer[Bob]->>Authorization Server: [PROOF] Note left of Authorization Server: Validate [NONCE] signature using [Public Key[Bob]] Authorization Server-->>API Consumer[Bob]: Success. [Session Token] API Consumer[Bob]-->>API: AUTHORIZED SESSION [Bob] with [API] via [Session Token] API-->>API Consumer[Bob]: end ``` The cost and complexity of building and maintaining a Public/Private Key Authentication mechanism, without exposing the Private Key, opening up replay attacks, or making a mistake in implementation somewhere can be high. If you're selling an API to multiple consumers, it's unlikely that it will be as trivial as the following `curl` to invoke the API that you want; as the integrating system will need to understand the protocol you choose. There are also complexities regarding the certificate lifecycle, and the need to either manage certificate rotation, or pin (hardcode) each certificate into the system. ```sh # this can usually be configured by some API Gateway products in infrastructure configuration. curl --cacert ca.crt \ --key client.key \ --cert client.crt \ https://api.example.com/v1/endpoint ``` * **Time to API Call**: Slow. * **Ease of API Consumer Integration**: Difficult * **Ease of API Producer Implementation**: Difficult * **Other Considerations**: * Severity of the public key being leaked is functionally zero -- no intermediary system holds enough data to make/replay requests except the original sender. ### Signed Tokens as API Keys A Signed Token is secret; in the same way that a Shared Secret is secret. However, due to standardization of technologies, it is starting to become commonplace to use long-lived Signed Tokens, in the form of JWTs (JSON Web Tokens), as API Keys. This enables the following pattern: ```sh curl --header "Authorization Bearer ${API_KEY}"\ http://api.example.com/v1/endpoint ``` ```mermaid sequenceDiagram participant API Consumer[Bob] participant API participant Authorization Server rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Configure Key API Consumer[Bob]-->>API: AUTHORIZED SESSION [Bob] with [API] API-->>API Consumer[Bob]: API Consumer[Bob]->>Authorization Server: Request New Secret Key Note left of Authorization Server: Make JWT[Secret Key] with Signing Key [K] via:

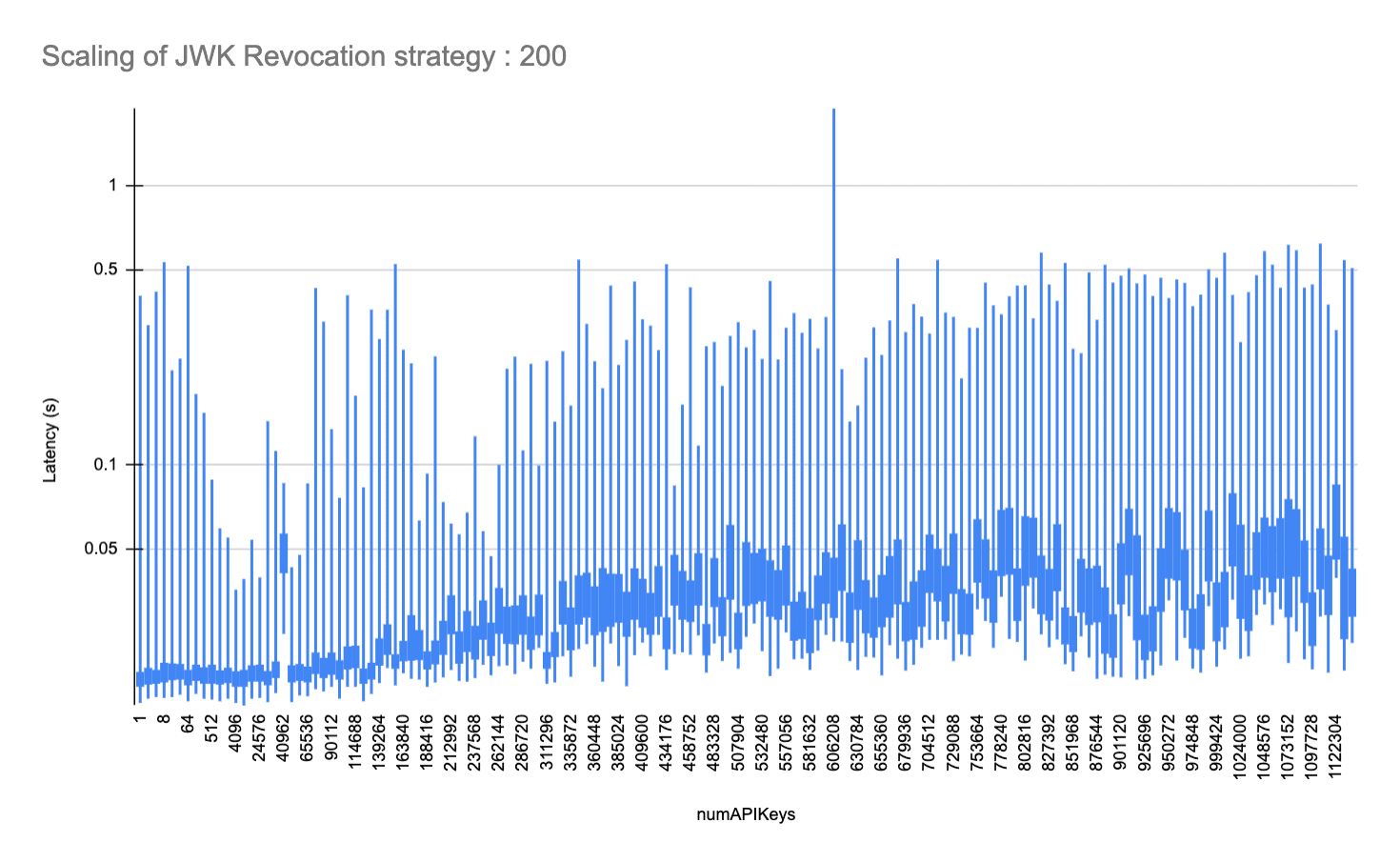

SIGN({"sub": Bob, "exp": 3 months}, K). Authorization Server-->>API Consumer[Bob]: [Secret Key] end rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],Authorization Server: Authorized API Request API Consumer[Bob]-->>API: SECURE SESSION [Unknown] with [API] API-->>API Consumer[Bob]: API Consumer[Bob]->>API: [Req] with header "Authorization Bearer \${JWT["Bob"]} API->>Authorization Server: Check JWT["Bob"] Note left of Authorization Server: Validate JWT[Secret Key] with Signing Key [K] Authorization Server-->>API: Success Note left of API: Process Request under context Bob API-->>API Consumer[Bob]: [Resp] end ``` * **Time to API Call**: Fast. * **Ease of API Consumer Integration**: Simple * **Ease of API Producer Implementation**: Simple * **Other Considerations**: * Difficult to revoke tokens: either a whitelist/blacklist is used (in which case, little advantage exists over shared secrets), or the signing key must be rotated. * Easy to add complexity through custom claims into the token, which can lead to complexity migrating tokens into a new form. * Easy to block requests from reaching application servers through an API Gateway and Asymmetric Keys (split into Private JWTs and Public JWKs). ### Our Approach: Signed Tokens as API Keys, but 1-Signing-Key-Per-API-key There are 3 problems with the Signed Tokens as API Keys pattern: 1. Revoking a key is hard: it is very difficult for users to revoke their own keys on an internal compromise. 2. Any compromise of the Signing Key is a compromise of all keys. 3. API Gateways can't usually hook into a whitelist/blacklist of tokens. To tackle these, we can do three things: 1. Use Asymmetrically Signed JWTs, storing and exposing a set of Public Keys via a JWKS (JSON Web Key Set) URI. 2. Sign each Token with a different Signing Key; burn the Signing Key immediately afterwards. 3. Ensure that API Gateways only retain a short-lived cache of JWKS (the Public Key to each Signing Key). ```mermaid sequenceDiagram participant API Consumer[Bob] participant Authorization Server participant API rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],API: Configure Key API Consumer[Bob]-->>Authorization Server: AUTHORIZED SESSION [Bob] with [Authorization Server] Authorization Server-->>API Consumer[Bob]: API Consumer[Bob]->>Authorization Server: Request New Secret Key [K] Note left of Authorization Server: Create a new Signing Key Pair [K]

(e.g. EdDSA Certificate with High Entropy)

Sign [Bob] with [K["PrivateKey"]] as [Secret Key]

Store [Bob, K["PublicKey"]]

Burn K["Private Key"] Authorization Server-->>API Consumer[Bob]: [Secret Key] end rect rgba(0, 0, 0, 0.05) note over API Consumer[Bob],API: Authorized API Request API Consumer[Bob]-->>Authorization Server: SECURE SESSION [Unknown] with [Authorization Server] Authorization Server-->>API Consumer[Bob]: API Consumer[Bob]->>Authorization Server: [Req] with header "Authorization Bearer \${JWT["Bob"]}" Note left of Authorization Server: Decode["Secret Key"] into JWT

Lookup Bob["Public Key"] via JWT.KID

Validate ["Secret Key"] matches Public Key["KID"] Authorization Server->>API: [Req], [Bob] Note left of API: Process Request under context Bob API-->>Authorization Server: [Resp] Authorization Server-->>API Consumer[Bob]: [Resp] end ``` This gives us a flow that's very similar to a shared secret, but with the advantage that: * Revoking a key is easy: the Public Key just needs to be removed from the JWKS and caches invalidated. * There is no Signing Key to compromise ; all Authorization Server state can be public. With the tradeoff: * Any API Gateway that validates a JWT must be able to regularly fetch JWKS (and cache them) from the Authorization Server. * After creating/revoking a key, there will be a short time delay (we generally configure this to be 15 seconds) whilst the key propagates into all API Gateway JWK caches. * More compute is required when constructing the Initial API Key; due to the generation of a public/private key pair. * You need to give the API Gateway application cluster slightly more memory to keep all Public Keys (1 per API key) in memory. #### In Practice: Envoy / Google Endpoints / EspV2 Envoy is a proxy server that is used to route traffic to a backend service. We ran extensive tests with ESPV2 (Envoy Service Proxy V2) and Google Endpoints, and found/validated the following performance characteristics as the number of keys increased. ```yaml # Espv2 is configured with an OpenAPI specification to use an external JWKs URI to validate incoming API Keys securityDefinitions: speakeasy_api_key: authorizationUrl: "" flow: "implicit" type: "oauth2" x-google-issuer: "https://app.speakeasy.com/v1/auth/oauth/{your-speakeasy-workspace-id}" x-google-jwks_uri: "https://app.speakeasy.com/v1/auth/oauth/{your-speakeasy-workspace-id}/.well-known/jwks.json" x-google-audiences: "acme-company" ``` We ran benchmarks of up to 1138688 API keys, structured as public/private 2048-bit RSA keypairs with a single RS256 Signed JWT-per-JWK, using a 8GiB Cloud Run managed Espv2 instance. At this key size and at ~100 requests/second, we observed a peak of ~37% utilization of memory, with ~7% utilized at no API keys. This implies an 8GiB EspV2 instance should scale to ~3M API Keys at this request rate. This also implies that this mechanism will scale with Envoy RAM with a minor deviation in maximum latency, to the degree of ~3.5ms additional maximum request latency every 8192 API keys. The average additional latency introduced by large numbers of API keys is affected to a much lesser degree, ~0.2ms every 8192 API Keys.  Given most API Applications have less than 3M API Keys active at any given time, this approach, in our belief, combines the best of both worlds: Public/Private Key Crypto, with the ease of an API Key. # api-change-detection-open-enums Source: https://speakeasy.com/blog/api-change-detection-open-enums import { Callout, ReactPlayer } from "@/lib/mdx/components"; Two exciting new features are coming to Speakeasy this week: API Change Detection and Open Enum support. Let's get into it 👇

If you're looking for a more comprehensive guide to API design, you can read our REST API Design Guide.

Order 123

Status: Shipped