
Designing agent experience: A practical guide for the era of AX

Nolan Sullivan

July 23, 2025 - 11 min read

A recent Gartner survey reveals that 85% of support teams are considering conversational AI in 2025.

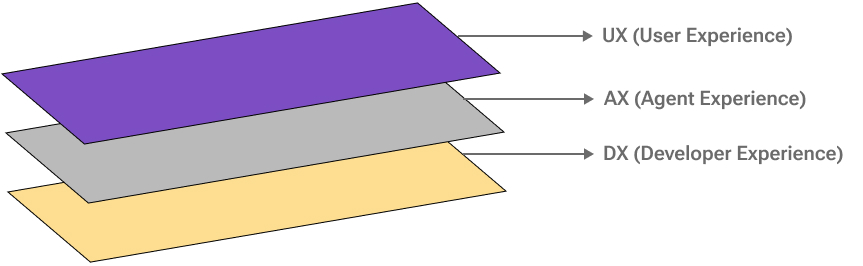

First we optimized for user experience (UX), hiding complexity behind elegant interfaces, ergonomic flows, and delightful visuals. Then we learned to care about developer experience (DX), prioritizing tooling, documentation, APIs, and SDKs that make integration frictionless.

Today, AI agents are no longer hidden tools. They answer support requests, act on user input, trigger system workflows, and can function autonomously on tasks for days. But unlike humans, agents can’t improvise. To function without human intervention, agents need clear tools, documentation, permissions, and fallback paths.

This is agent experience (AX), a concept introduced in early 2025 by Mathias Biilmann, CEO of Netlify.

This post unpacks the core principles of AX, how they relate to UX and DX, and practical implementation strategies.

AX at the intersection of UX and DX

Agent experience (AX) bridges user experience (UX) and developer experience (DX).

The UX layer: Designing for human expectations

When agents interact with users, AX must prioritize human-centered design principles.

- Predictability: Users need to trust that agents will behave consistently every time. If your agent can create a Trello card from a specific instruction, it should succeed whether it’s asked once or a hundred times.

- Transparency: Users should know they are interacting with an agent, not a human. Agents should introduce themselves and clearly state their capabilities. This avoids confusion and unrealistic expectations.

- Coherence: Agents must follow the natural flow of tasks and match user expectations. For example, booking a flight should include a confirmation email. Completing a booking without following up leaves users uncertain and frustrated.

The DX layer: Building for agent autonomy

While user-facing AX principles focus on interaction design and user flows, system-facing principles are about infrastructure, tooling, and system architecture.

- Toolability: Expose only the tools agents actually need, with clear documentation and proper scoping. Don’t overwhelm a sales-focused agent with 300 operations across all departments — scope tools by domain and restrict access to relevant endpoints only.

- Recoverability: Agents must handle failures gracefully with retry logic, alternate paths, and user communication. For example, “That card didn’t go through. Want to try another one or switch to PayPal?”

- Traceability: Track what agents do, when, and why using headers like `X-Agent-Request: true` and `X-Agent-Name`. Without this, debugging becomes guesswork, and you can’t distinguish agent actions from human actions in logs.
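To make the recoverability principle above concrete, here is a minimal Python sketch of "retry, then take an alternate path, then tell the user". The function name `run_with_recovery`, its parameters, and the fallback message are all hypothetical; a real agent would likely catch narrower exception types and use a proper backoff policy.

```python
import time
from typing import Callable, Sequence


def run_with_recovery(
    attempts: Sequence[Callable[[], str]],
    retries_per_attempt: int = 2,
    delay_seconds: float = 0.0,
    fallback_message: str = "That didn't go through. Want to try another option?",
) -> str:
    """Try each path in order, retrying each one before moving to the next."""
    for attempt in attempts:
        for _ in range(retries_per_attempt):
            try:
                return attempt()
            except Exception:
                # Illustrative only: production code should catch specific errors.
                time.sleep(delay_seconds)
    # Every path failed, so hand the decision back to the user.
    return fallback_message
```

Passing the payment paths as an ordered list (for example, card first, then PayPal) keeps the alternate route explicit instead of burying it in nested error handling.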

Putting AX principles into practice

Implementing AX comes down to clear, actionable practices you can apply to your development workflow, tooling, and API design. Let’s see how this works in practice.

Design prompts for reliable agent behavior

Prompts define how agents behave and are critical to their autonomy. The best tools, APIs, and documentation won’t save your product if the prompt doesn’t define the right intent, scope, or fallback behavior.

Let’s say you’re building a customer service agent. Here’s an example of a prompt that won’t work:

You are a customer assistant. Help users get refunds by checking the status on `/orders` and `/refunds`.

This prompt fails because:

- It doesn’t define scope: The agent doesn’t know which APIs or tools it can use.

- It doesn’t specify criteria: There are no rules to decide when a refund is allowed.

- It doesn’t provide format: The agent has no guidance on how to structure its responses.

- It doesn’t include fallback: There’s no plan for what to do if the refund attempt fails.

Let’s revise the prompt:

You are a refund-processing agent for an e-commerce site.

When a user asks for a refund:

1. Greet the customer.

2. Ask them for their order ID and confirm it matches the format: `ord_XXXXXX`.

3. Call the `/orders/{id}` endpoint to check if the order exists and is in `delivered` status.

4. If eligible, use the `/refunds` endpoint with the provided amount and reason.

5. If the refund fails (e.g. 403 or 500), respond with:

“I couldn't process that refund. Please contact support or try again later.”

This revised prompt works because:

- Scope is defined: The agent is limited to using the `/orders` and `/refunds` endpoints.

- Criteria are clear: The agent only proceeds if the order status is `delivered`.

- Format is enforced: The agent knows what steps to follow and what output to generate.

- Fallback is handled: The agent informs the user if the refund fails and offers an alternate path.
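The same steps can also be enforced in the code that sits behind the agent's tools, so the rules hold even if the model drifts from the prompt. The sketch below is illustrative: `handle_refund`, the injected `get_order_status` and `create_refund` callables, and every message except the fallback are hypothetical, and it assumes `XXXXXX` means exactly six alphanumeric characters.

```python
import re
from typing import Callable

# Assumption: "ord_XXXXXX" means "ord_" followed by six alphanumeric characters.
ORDER_ID_PATTERN = re.compile(r"ord_[A-Za-z0-9]{6}")

# Fallback wording taken from the revised prompt.
FALLBACK = "I couldn't process that refund. Please contact support or try again later."


def handle_refund(
    order_id: str,
    get_order_status: Callable[[str], str],  # stands in for GET /orders/{id}
    create_refund: Callable[[str], int],     # stands in for POST /refunds, returns HTTP status
) -> str:
    """Apply the prompt's checks in order and return the message for the user."""
    if not ORDER_ID_PATTERN.fullmatch(order_id):
        return "That order ID doesn't match the format ord_XXXXXX. Could you check it?"
    if get_order_status(order_id) != "delivered":
        return "This order isn't eligible for a refund yet."
    status_code = create_refund(order_id)
    if status_code >= 400:
        # Covers the 403 and 500 cases named in the prompt.
        return FALLBACK
    return "Your refund has been submitted."
```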

Create documentation for LLMs, not humans

While a human can infer context from a sentence or connect steps based on intuition, agents need clear instructions about what to call, when, and in what order. While this might result in more verbose documentation, it’s necessary to enable autonomy.

Take this example. Your API requires an address to be set on a cart before validating it. You’ve shown this flow in a tutorial for developers, which is enough for a human to follow. But your agent reads only the endpoint descriptions. Here’s what the current description looks like:

paths:
  /cart/{cartId}/billing-address:
    put:
      summary: Set the billing address for the cart.
      description: |
        This endpoint assigns a billing address to the given cart.
      parameters:
        - name: cartId
          in: path
          required: true
          schema:
            type: string
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Address'
      responses:
        '200':
          description: Billing address successfully added.
        '400':
          description: Invalid address payload.

This description is not useful for agents as it doesn’t provide the necessary context.

- When should this endpoint be called in the workflow?

- What happens if the cart already has a billing address?

- What format requirements must be met to avoid errors?

Agent-focused documentation should look like this:

paths:
  /cart/{cartId}/billing-address:
    put:
      summary: Set the billing address for the cart.
      description: |
        This endpoint assigns a billing address to the given cart.
        It must be called before the cart can proceed to checkout, as a valid billing address is required for order validation and payment processing.

        Agents:
        - Use this operation after the cart has been created but before checkout validation.
        - If the cart already has a billing address, this call will overwrite it.
        - Ensure the address format complies with the schema to avoid validation errors.
      parameters:
        - name: cartId
          in: path
          required: true
          schema:
            type: string
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Address'
      responses:
        '200':
          description: Billing address successfully added.
        '400':
          description: Invalid address payload.

This extended documentation helps agents choose the right endpoints for their tasks. However, adding agent-specific descriptions to an OpenAPI document will result in agent-focused text appearing in documentation for humans when rendered by tools like Swagger UI and Redoc.

The x-speakeasy-mcp extension in Speakeasy’s MCP server generation addresses this challenge directly. The extension lets you include agent-specific operation names, detailed workflow descriptions, and scopes directly in your OpenAPI document. Since documentation renderers like Swagger UI don’t recognize custom extensions, they ignore this agent-focused content. Your human-readable documentation stays clean while agents get the detailed context they need.

paths:
  /cart/{cartId}/billing-address:
    put:
      operationId: setBillingAddress
      tags: ["AI & MCP"]
      summary: Set billing address for cart
      description: API endpoint for assigning a billing address to a cart before checkout.
      x-speakeasy-mcp:
        disabled: false
        name: set-billing-address
        scopes: [cart, write]
        description: |
          Adds or updates the billing address associated with the cart.
          - Use this operation after the cart has been created.
          - Ensure this step is completed before attempting to validate or checkout the cart.
          - Provide all mandatory address fields to avoid validation failures.
          - If the billing address already exists, this call will replace it.
          - This operation has no side effects besides updating the billing address.
      parameters:
        - name: cartId
          in: path
          required: true
          schema:
            type: string
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: '#/components/schemas/Address'
      responses:
        '200':
          description: Billing address successfully added.
        '400':
          description: Invalid address payload.

Allow agents to build SDKs and manage API keys

Just as API documentation must be tailored for agents to act autonomously, so must SDKs. The quality of the SDK matters, including the defined types, the README.md, and the docstrings on the methods, classes, or objects.

Agents may be designed to write or complete their own SDKs when needed. In this context, the best SDK for an agent is often the API documentation itself. A well-crafted and precise OpenAPI document is often more valuable to an agent than any wrapper code, because it offers direct, structured access to the service.

For APIs that require authentication, agents need clear instructions on how to handle secured requests, specifically how to:

- Create new API keys or tokens for authentication.

- Refresh tokens or keys when they expire.

- Handle authentication failures gracefully and know when to retry or escalate.

Without this level of clarity, an agent cannot reliably access or interact with secured APIs.
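One way to encode the refresh, retry, and escalate behavior is a small wrapper around each authenticated call. This is a hedged sketch, not a prescribed design: `call_with_auth`, `EscalationRequired`, and the injected `send`, `get_token`, and `refresh_token` callables are hypothetical names, and it assumes the API signals an expired or invalid credential with HTTP 401.

```python
from typing import Callable


class EscalationRequired(Exception):
    """Raised when the agent should stop retrying and hand off to a human."""


def call_with_auth(
    send: Callable[[str], int],         # performs the request, returns the HTTP status
    get_token: Callable[[], str],       # returns the current API key or token
    refresh_token: Callable[[], str],   # obtains a fresh key or token
    max_refreshes: int = 1,
) -> int:
    """Send a request, refreshing credentials on 401 up to max_refreshes times."""
    token = get_token()
    for refreshes_used in range(max_refreshes + 1):
        status = send(token)
        if status != 401:
            return status
        if refreshes_used < max_refreshes:
            token = refresh_token()
    # Credentials still rejected after refreshing: escalate instead of looping.
    raise EscalationRequired("Authentication kept failing after refresh.")
```

Capping the number of refreshes gives the agent a clear point at which to stop retrying and escalate.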

Control access with tool curation and role scopes

Let’s say you’ve built an e-commerce agent to help customers search for products and add them to their cart. The agent should not be able to request credit card details or complete the checkout. Agents’ capabilities must be scoped tightly to their intended purpose.

Because agents can perform powerful and wide-ranging actions, security is a core pillar of AX. Poorly designed access controls can lead to data leaks, unintended behavior, or even dangerous operations.

Following the AX principle of toolability, the most effective approach to minimizing these risks is through the tools you expose to the agent. For example, to restrict an agent to product search, expose just read-only product operations in the OpenAPI document.

You can curate tools manually or automatically.

- Manual curation involves directly editing an OpenAPI document to remove unwanted operations.

- Automated curation uses automated tooling to generate a curated document based on predefined scopes.

Speakeasy supports automated tool curation using the x-speakeasy-mcp extension, which lets you define scopes that the MCP server generator understands and uses to generate tools accordingly.

For example, you can define scopes (like read and products) in your full OpenAPI document, and the Speakeasy MCP server generator will automatically create a curated toolset that includes only the operations matching your specified scopes.

paths:
  /products:
    get:
      operationId: listProducts
      tags: [products]
      summary: List products
      description: API endpoint for retrieving a list of products from the CMS
      x-speakeasy-mcp:
        disabled: false
        name: list-products
        scopes: [read, products]
        description: |
          Retrieves a list of available products from the CMS. Supports filtering and pagination
          if configured. This endpoint is read-only and does not modify any data.

When you start the server in read-only mode, only read operations will be made available as tools.

{
  "mcpServers": {
    "MyAPI": {
      "command": "npx",
      "args": ["your-npm-package@latest", "start"],
      "env": {
        "API_TOKEN": "your-api-token-here"
      }
    }
  }
}

You can further scope operations by specifying additional scopes like products if you need to.
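To illustrate the idea behind scope-based curation, here is a small Python sketch that filters an already-parsed OpenAPI `paths` object by the scopes declared under `x-speakeasy-mcp`. It is not how the Speakeasy generator is implemented. The function `curate_paths` is hypothetical, and the matching rule (an operation is kept only when it carries every requested scope) is an assumption that the real tool may not share.

```python
from typing import Any, Dict, Set

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def curate_paths(paths: Dict[str, Any], requested_scopes: Set[str]) -> Dict[str, Any]:
    """Keep enabled operations whose declared scopes include every requested scope."""
    curated: Dict[str, Any] = {}
    for path, item in paths.items():
        kept = {}
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            mcp = operation.get("x-speakeasy-mcp", {})
            if mcp.get("disabled", False):
                continue
            # Assumed rule: all requested scopes must be present on the operation.
            if requested_scopes <= set(mcp.get("scopes", [])):
                kept[method] = operation
        if kept:
            curated[path] = kept
    return curated
```

Under this assumed rule, requesting `{"read"}` keeps `listProducts` and drops the billing-address operation, and adding `products` to the request narrows the set further.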

Tool curation controls what agents can see, but you also need to control what they can do with those tools. Use role-based access control to limit agents to only the permissions they need.

In our e-commerce example, you can limit agent permissions in a system like this:

# Permissions granted to each role
ROLE_PERMISSIONS = {
    "agent": {"search_products", "add_to_cart"},
    "admin": {"search_products", "add_to_cart", "checkout", "manage_users"},
    "user": {"search_products", "add_to_cart", "checkout"},
}


# Simulated agent request
class Agent:
    def __init__(self, role: str):
        self.role = role
        # Unknown roles get no permissions at all
        self.permissions = ROLE_PERMISSIONS.get(role, set())

    def can(self, action: str) -> bool:
        return action in self.permissions


agent = Agent("agent")
print(agent.can("add_to_cart"))  # True
print(agent.can("checkout"))     # False

Log and audit every agent action

Logging and auditing are essential to AX design. When agents make mistakes, you need to distinguish their actions from actions taken by humans. Include metadata fields or custom headers in every agent-generated request to clearly flag the source.

import requests

# Example request made by an AI agent
headers = {
    "Content-Type": "application/json",
    "X-Agent-Request": "true",  # Custom header to flag the request as agent-initiated
    "X-Agent-Name": "product-recommender-v1",  # Include the agent name or version
}

payload = {
    "query": "search term",
    "filters": ["in-stock", "category:electronics"],
}

response = requests.post("https://api.example.com/search", json=payload, headers=headers)

if response.ok:
    print("Agent request succeeded:", response.json())
else:
    print("Request failed with status:", response.status_code)

AI observability and monitoring platforms like Langfuse
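On the receiving side, the same headers can be turned into structured audit records. The sketch below assumes your API can see the incoming request headers as a simple mapping. The function `audit_record` and the record fields (`actor`, `agent_name`) are hypothetical choices for illustration, not part of any particular logging platform.

```python
import json
from typing import Mapping


def audit_record(method: str, path: str, headers: Mapping[str, str]) -> str:
    """Build a JSON log line that separates agent traffic from human traffic."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    is_agent = normalized.get("x-agent-request", "").lower() == "true"
    record = {
        "method": method,
        "path": path,
        "actor": "agent" if is_agent else "human",
        "agent_name": normalized.get("x-agent-name") if is_agent else None,
    }
    return json.dumps(record, sort_keys=True)
```

Emitting one JSON object per request makes it straightforward to filter logs by `actor` or by a specific agent version when something goes wrong.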

Five steps to better AX

As AI agents move from novelty to infrastructure, AX determines whether they deliver reliable automation or frustrated users. To start implementing great AX without complicating things:

- Write endpoint docs for agents. Explain when to call, what it does, and what follows.

- Scope tools tightly. Don’t expose your entire API, just what the agent truly needs.

- Include tracing headers like `X-Agent-Request: true` to trace and debug agent actions.

- Design prompts with clear goals, inputs, validation steps, and fallbacks.

- Build for failure. Retries, alternatives, and user-facing messages must be part of the flow.