How Polar reinvented product onboarding with AI agents

Polar

FinTech

Gram

Speakeasy

When the Model Context Protocol (MCP) was announced, Polar’s engineering team faced a familiar challenge: how do you balance building core product with exploring emerging technologies when you’re a small team?

“When you're a small team, you're always balancing product development with exploration. We weren't sure initially exactly how we would use MCP, but with Speakeasy we could enable it so easily. We thought, why not? Let's just spin it up and figure out how it can work for our customers later. That's the luxury of working with tools that don't require massive upfront investment.”

Pieter Beulque,

Polar Engineering Team

In February 2025, Polar launched a locally hosted MCP server built with Speakeasy's generator. Over the next few months, by observing their users and experimenting internally, they identified three distinct use cases where AI agents connected to their MCP server could have an immediate impact.

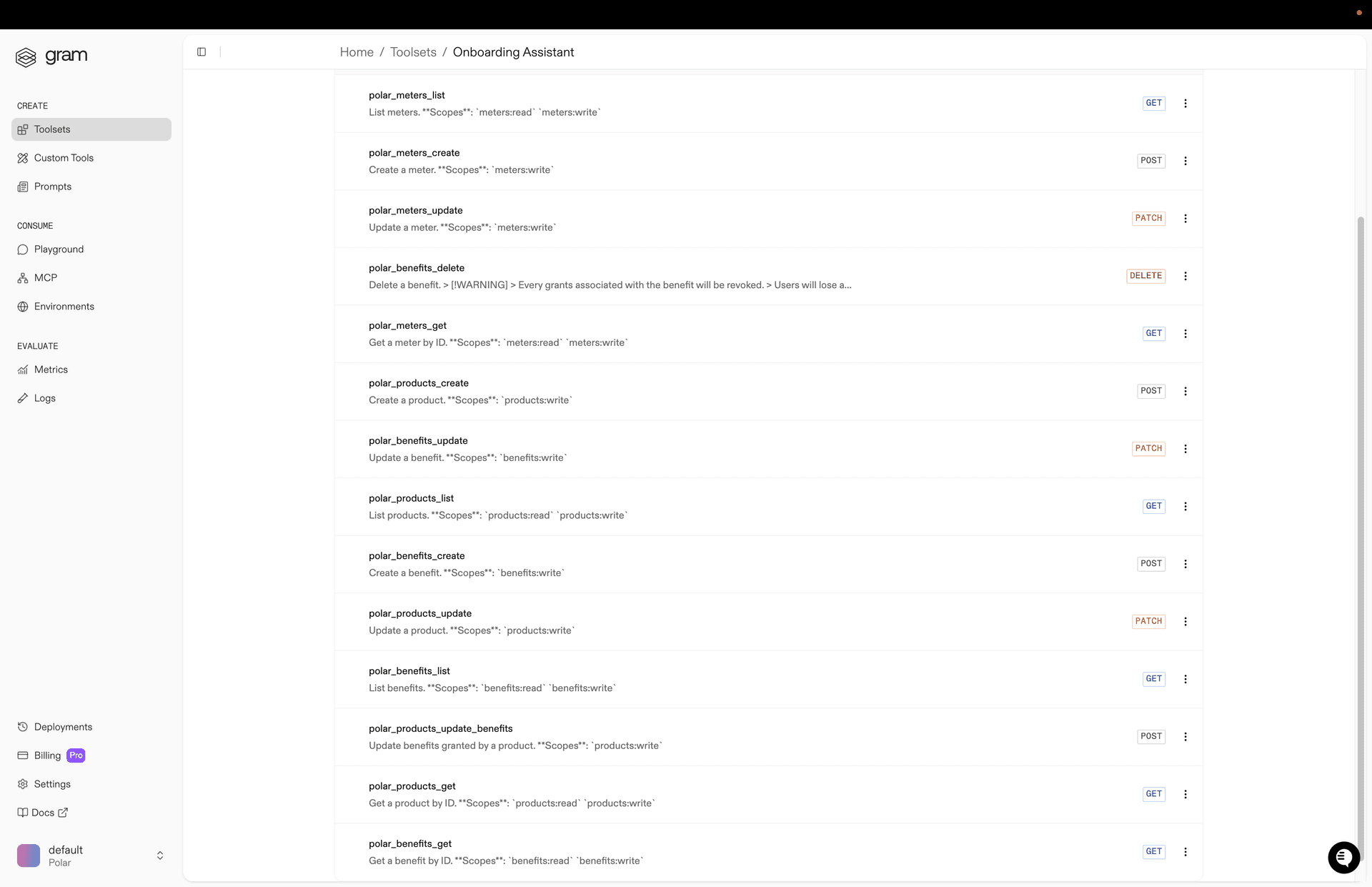

To start bringing those use cases to production, they built a remote MCP server using Gram.

Use case 1: AI-assisted onboarding

Even with a developer-friendly API, setting up a payments platform requires understanding pricing models, configuring products correctly, and navigating multiple screens. For developers new to monetization, this complexity can slow down what should be a quick process.

Polar’s traditional onboarding flow followed the standard pattern: new customers sign up through the web app, navigate through forms to configure products and pricing, and then integrate the API into their codebase. It’s functional, but requires understanding payment terminology and making configuration decisions upfront.

With MCP, Polar realized they could flip this experience entirely. Instead of filling out forms, developers could describe their business model in natural language: “I want to charge $10 per month for my API, with usage-based pricing for calls over 1,000.” Polar’s AI agent would handle creating the appropriate products, setting up pricing tiers, and configuring entitlements automatically.
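To make that flow more concrete, here is a minimal sketch of the tool calls an agent might issue for the example prompt. The JSON-RPC 2.0 `tools/call` envelope follows the MCP specification; the tool names (`meters_create`, `products_create`) and every argument field are hypothetical placeholders, not Polar's actual tool schema. Prices are kept in integer cents to avoid floating-point rounding.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def tool_call(name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request using the JSON-RPC 2.0 envelope."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def plan_onboarding(monthly_cents: int, included_calls: int,
                    overage_cents_per_call: int) -> list[dict]:
    """Translate the example prompt into a sequence of tool calls.

    Tool names and argument fields are hypothetical, for illustration only.
    """
    return [
        # 1. A meter that counts API calls (hypothetical tool).
        tool_call("meters_create", {"name": "api_calls", "aggregation": "count"}),
        # 2. A monthly product: a fixed base price plus a metered overage price.
        tool_call("products_create", {
            "name": "API access",
            "recurring_interval": "month",
            "prices": [
                {"type": "fixed", "amount_cents": monthly_cents},
                {"type": "metered", "meter": "api_calls",
                 "included_units": included_calls,
                 "unit_amount_cents": overage_cents_per_call},
            ],
        }),
    ]


if __name__ == "__main__":
    # $10/month with 1,000 included calls; the overage price is arbitrary here.
    for request in plan_onboarding(1000, 1000, 1):
        print(json.dumps(request))
```

The prompt does not state a per-call overage price, so a real agent would need to ask for it; the value in the usage example is arbitrary.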

Use case 2: Natural language analytics

One of the most common reasons users check their payment dashboard is to answer simple questions: “What did I sell most this month?” or “Who was my biggest revenue customer?” These questions typically require navigating analytics dashboards, filtering data, and sometimes writing custom queries.

Polar's MCP server will enable a more direct approach: users will be able to ask questions in natural language, and the AI agent will query Polar's MCP server and answer with derived analytics.

“We think there's an opportunity to replace SQL with natural language for 80%+ of reporting. Give the LLM pure numbers and output in JSON, and it can do all these derived analytics.”

Pieter Beulque,

Polar Engineering Team
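As a rough illustration of what "pure numbers in JSON" makes possible, the sketch below answers both example questions from a JSON list of orders. The field names (`customer_email`, `product`, `amount_cents`, `created_at`) and the sample data are hypothetical; in practice an agent would fetch orders through an MCP tool and perform a similar aggregation itself.

```python
import json
from collections import Counter, defaultdict

# Hypothetical order data, in the shape an MCP tool might return as JSON.
SAMPLE_ORDERS = json.dumps([
    {"customer_email": "a@example.com", "product": "Pro", "amount_cents": 2000, "created_at": "2025-06-03"},
    {"customer_email": "b@example.com", "product": "Basic", "amount_cents": 500, "created_at": "2025-06-10"},
    {"customer_email": "b@example.com", "product": "Basic", "amount_cents": 500, "created_at": "2025-06-21"},
    {"customer_email": "a@example.com", "product": "Pro", "amount_cents": 2000, "created_at": "2025-05-30"},
])


def derive_analytics(orders_json: str, month: str) -> dict:
    """Answer "what sold most?" and "who paid most?" for one month (YYYY-MM)."""
    orders = [o for o in json.loads(orders_json) if o["created_at"].startswith(month)]
    # Units sold per product.
    units = Counter(o["product"] for o in orders)
    # Revenue per customer, in cents.
    revenue: dict[str, int] = defaultdict(int)
    for o in orders:
        revenue[o["customer_email"]] += o["amount_cents"]
    return {
        "top_product": units.most_common(1)[0][0] if units else None,
        "top_customer": max(revenue, key=revenue.get) if revenue else None,
        "top_customer_revenue_cents": max(revenue.values(), default=0),
    }


if __name__ == "__main__":
    print(derive_analytics(SAMPLE_ORDERS, "2025-06"))
    # → {'top_product': 'Basic', 'top_customer': 'a@example.com', 'top_customer_revenue_cents': 2000}
```

The two answers differ on purpose: the product sold most often by units is not the one bringing in the most revenue, which is exactly the kind of distinction a dashboard filter makes tedious.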

Use case 3: Operational automation through agents

The most ambitious use case involves handling common but tedious day-to-day merchant operations through AI agents. Processing refunds, managing subscriptions, and responding to customer issues could all happen through natural language commands rather than manual dashboard interactions.

In an ideal world, when a complaint comes in, a merchant can just say, ‘refund 50% of what this emailer has paid us.’ The Polar agent figures out the customer, finds their purchases, and handles the refunding logic.

No more manually looking up orders, calculating amounts, and executing refunds through multiple steps. With an MCP-powered agent, the entire workflow collapses into a single natural language instruction.
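The "refunding logic" behind that single instruction can be sketched as follows. This is a minimal illustration under assumed rules, not Polar's implementation: the `Order` type and its fields are hypothetical, amounts are integer cents, the target is 50% of the gross amount paid (rounded down to whole cents), and the target is allocated greedily across orders without exceeding any order's remaining refundable balance.

```python
from dataclasses import dataclass


@dataclass
class Order:
    """Hypothetical order record; not Polar's actual data model."""
    id: str
    customer_email: str
    amount_cents: int        # amount the customer paid
    refunded_cents: int = 0  # amount already refunded


def plan_partial_refund(orders: list[Order], email: str, percent: int) -> dict[str, int]:
    """Return {order_id: cents_to_refund} totalling `percent` of what `email` paid.

    Never refunds more than an order's remaining balance; raises if the
    target cannot be met because earlier refunds used up the balance.
    """
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    # 1. Find the customer's orders (email match is case-insensitive).
    mine = [o for o in orders if o.customer_email.lower() == email.lower()]
    # 2. Compute the target on the total paid; integer division rounds down.
    remaining = sum(o.amount_cents for o in mine) * percent // 100
    # 3. Allocate the target across orders, capped by each refundable balance.
    plan: dict[str, int] = {}
    for o in mine:
        if remaining == 0:
            break
        amount = min(o.amount_cents - o.refunded_cents, remaining)
        if amount > 0:
            plan[o.id] = amount
            remaining -= amount
    if remaining:
        raise ValueError(f"cannot refund {remaining} more cents: balance already refunded")
    return plan


if __name__ == "__main__":
    orders = [
        Order("o1", "a@example.com", 3000, refunded_cents=2000),
        Order("o2", "A@example.com", 2000),
        Order("o3", "b@example.com", 700),
    ]
    print(plan_partial_refund(orders, "a@example.com", 50))
    # → {'o1': 1000, 'o2': 1500}
```

Whether earlier refunds should reduce the base ("50% of what they paid" versus "50% of what they still hold") is a business decision this sketch does not settle; it uses the gross amount paid.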

Why Gram made sense

For a small team, building an MCP server from scratch would mean weeks spent on protocol implementation, authentication flows, and ongoing maintenance – all before delivering any value to customers.

“I did consider building the MCP server in-house for five minutes, but then realized it would be a productivity black hole. MCP is still really new and the supporting tooling is still maturing. With Gram, I don't need to worry about any of that, I can focus on the core product.”

Pieter Beulque,

Polar Engineering Team

Gram’s automatic generation from Polar’s API meant they could skip the infrastructure work entirely. The team built their AI-assisted onboarding experience in less than a week. The architecture was elegantly simple: Vercel’s AI SDK

What Gram enabled

Polar shipped their first AI-powered use case to production in under a week, validating the concept before committing significant engineering resources. As their APIs evolve, Gram automatically updates the MCP server without manual intervention, eliminating what would otherwise be an ongoing maintenance burden.
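Gram's actual generation logic isn't described here, so the following is only a sketch of the general idea behind spec-driven tools, assuming a simple mapping: each OpenAPI operation becomes an MCP tool whose `name` comes from `operationId`, whose `description` comes from `summary`, and whose `inputSchema` (the JSON Schema field MCP tool definitions carry) is assembled from the operation's parameters and JSON request body. Because tools are derived from the spec, re-running the mapping after the spec changes picks up new or modified endpoints. The example spec and its paths are hypothetical.

```python
import re

HTTP_METHODS = {"get", "put", "post", "delete", "patch"}


def operation_to_tool(path: str, method: str, op: dict) -> dict:
    """Map one OpenAPI operation to an MCP tool definition (assumed mapping)."""
    properties: dict = {}
    required: list[str] = []
    # Path and query parameters become top-level tool arguments.
    for param in op.get("parameters", []):
        properties[param["name"]] = param.get("schema", {})
        if param.get("required") or param.get("in") == "path":
            required.append(param["name"])
    # A JSON request body becomes a single `body` argument.
    body = op.get("requestBody", {}).get("content", {}).get("application/json")
    if body is not None:
        properties["body"] = body.get("schema", {})
        if op["requestBody"].get("required"):
            required.append("body")
    # Fall back to a name derived from method + path when operationId is absent.
    fallback = re.sub(r"[^A-Za-z0-9]+", "_", f"{method} {path}").strip("_").lower()
    return {
        "name": op.get("operationId") or fallback,
        "description": op.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def spec_to_tools(spec: dict) -> list[dict]:
    """Generate one tool per operation in an OpenAPI document's `paths`."""
    return [
        operation_to_tool(path, method, op)
        for path, item in spec.get("paths", {}).items()
        for method, op in item.items()
        if method in HTTP_METHODS  # skip path-level keys such as "parameters"
    ]


# Hypothetical two-operation spec used for illustration.
EXAMPLE_SPEC = {
    "openapi": "3.1.0",
    "paths": {
        "/orders/{id}": {
            "get": {
                "operationId": "orders_get",
                "summary": "Get an order",
                "parameters": [{"name": "id", "in": "path", "required": True,
                                "schema": {"type": "string"}}],
            }
        },
        "/refunds": {
            "post": {
                "summary": "Create a refund",
                "requestBody": {"required": True, "content": {
                    "application/json": {"schema": {"type": "object"}}}},
            }
        },
    },
}

if __name__ == "__main__":
    for tool in spec_to_tools(EXAMPLE_SPEC):
        print(tool["name"], tool["inputSchema"]["required"])
```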

While automatic generation from their OpenAPI spec covers Polar’s entire API surface area, Gram’s custom tool builder provides flexibility when needed. For complex workflows like refund processing that require specialized business logic, Polar can create hand-crafted tools that encapsulate the full operation into a simple, natural language command.
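Gram's custom tool builder has its own interface, which isn't shown here. As a neutral sketch, the following uses the plain MCP tool-definition shape (`name`, `description`, `inputSchema`) for a hypothetical `refund_customer_percentage` tool that collapses several endpoint calls into one operation with two arguments, plus a minimal argument check. The tool name, its fields, and the checker are all assumptions; the checker covers only `type`, `minimum`, and `maximum`, and is not a full JSON Schema validator.

```python
# Hypothetical hand-crafted tool: one high-level operation instead of several
# generated endpoint tools (look up customer, list orders, create refunds).
REFUND_TOOL = {
    "name": "refund_customer_percentage",
    "description": "Refund a percentage of everything a customer, looked up by email, has paid.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "email": {"type": "string", "format": "email"},  # format is not checked below
            "percent": {"type": "integer", "minimum": 1, "maximum": 100},
        },
        "required": ["email", "percent"],
    },
}

_TYPES = {"string": str, "integer": int}


def check_arguments(tool: dict, args: dict) -> list[str]:
    """Return a list of problems with `args` (empty list means they look valid)."""
    schema = tool["inputSchema"]
    errors = [f"missing required argument '{k}'"
              for k in schema.get("required", []) if k not in args]
    for key, value in args.items():
        prop = schema["properties"].get(key)
        if prop is None:
            errors.append(f"unknown argument '{key}'")
            continue
        expected = _TYPES.get(prop.get("type"))
        # bool is a subclass of int in Python, so reject it explicitly.
        if expected and (not isinstance(value, expected) or isinstance(value, bool)):
            errors.append(f"'{key}' must be of type {prop['type']}")
            continue
        if "minimum" in prop and value < prop["minimum"]:
            errors.append(f"'{key}' must be >= {prop['minimum']}")
        if "maximum" in prop and value > prop["maximum"]:
            errors.append(f"'{key}' must be <= {prop['maximum']}")
    return errors


if __name__ == "__main__":
    print(check_arguments(REFUND_TOOL, {"email": "a@example.com", "percent": 50}))
    # → []
```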

Looking toward an AI-integrated future

The partnership with Speakeasy has allowed Polar to pioneer AI-powered payment workflows without diverting engineering resources from their core product. As they expand the analytics and operational automation use cases based on real usage patterns, the decision to build their MCP server with Gram continues to pay dividends.

“The collaboration with the Speakeasy team has been great. The response times are always super fast and we're really happy with that.”

Emil Widlund,

Polar Engineering Team

The Polar team is particularly excited about Gram’s upcoming observability features. Once available, they’ll be able to see which tools get called and where users experience friction, providing data-driven insights into which use cases resonate most. This will help them prioritize future investment: double down on the onboarding flow, or expand the analytics and operational automation capabilities?

For developer tools companies exploring AI integration, Polar’s approach offers a clear roadmap: identify use cases through exploration, leverage specialist infrastructure to ship quickly, and invest engineering resources where they create the most differentiated value. Sometimes the fastest path to innovation is knowing what not to build yourself.